This article is a short guide written by designers for designers.

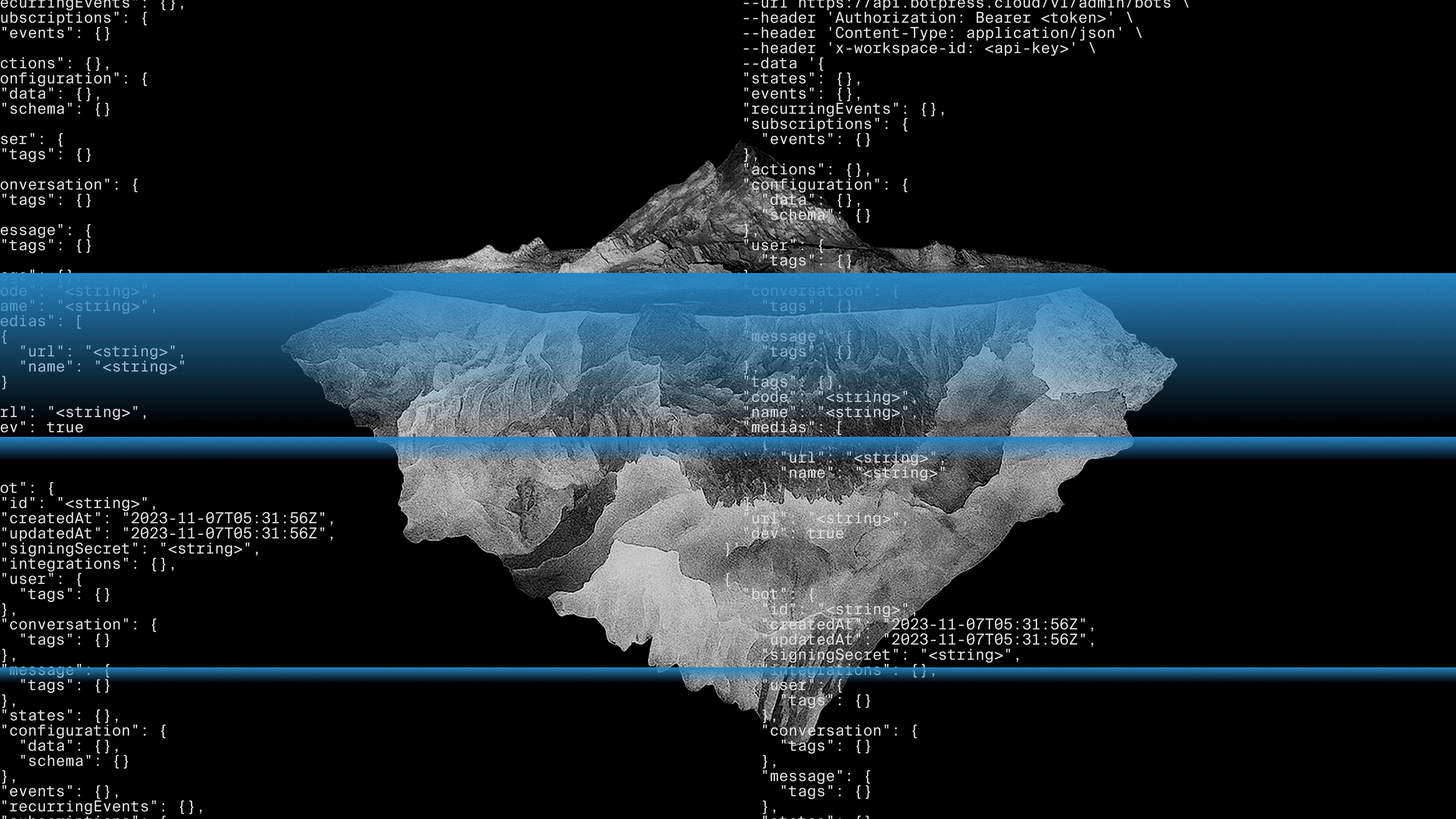

It’s not a technical piece or an academic paper, but an honest account of what we learned working on projects where generative AI and conversational agents weren’t just one tool among many, but the real core of the designed experience.

If you’re looking for a catchy quote to share on LinkedIn, this article might not be for you. Here we talk about hands-on design, the kind made of trials, mistakes, and constant iteration. It’s a story meant for those who, like us, are “in between”: between strategy and the final outcome, between vision and implementation.

Recently, we worked on two very different projects, both driven by the same goal: building a conversational AI assistant that is useful, accessible, and aligned with people’s real needs.

- Nexi – Alberto worked on a virtual customer care assistant. It was a 9-month project in which, together with Nexi’s internal Design Studio, we analyzed different business areas to understand where generative AI could have the greatest impact on end users. After we evaluated KPIs and ROI, customer support emerged as the most promising area: the goal was to move from generic support to a tailor-made service that could provide targeted, relevant answers.

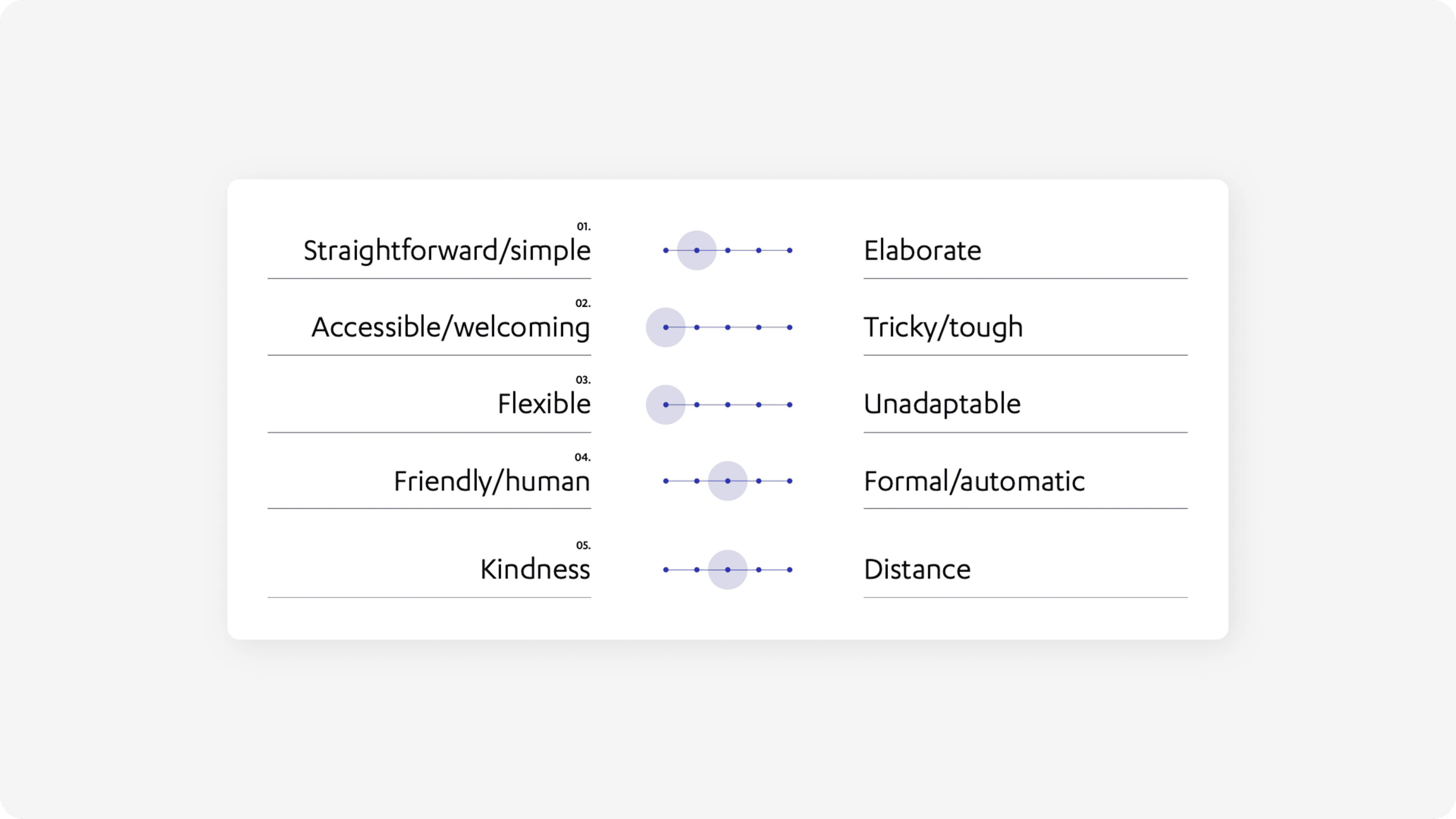

- Lenovo – Gea worked on LIA (Lenovo Intelligent Assistant), a 6-month project in the B2C retail context. We have collaborated with Lenovo for many years on several initiatives, but this was an experimental workstream, set up to test AI in retail spaces and understand what real value it could bring.

LIA is an AI assistant that simplifies product selection within a very broad catalog, adapting language and content to the user’s level of expertise. The experience combines a modular interface, light animations, and a distinctive physical presence in-store. The system integrates multiple layers, from data sources to the frontend, and is built on an AI architecture that interprets user needs and returns concise, technical, personalized answers.

After explaining “how it’s done” too many times to colleagues and curious friends, we decided to gather what we learned into ten points and share them with others who, like us, are facing these challenges from scratch, or nearly so. These are not absolute truths, but lessons born from attempts, successes, and above all mistakes. Maybe they’ll be useful to you too.