AIs are increasingly present in our everyday lives, and exponential technologies are drawing the boundaries of a largely unknown land. As designers, we are puzzling over how to cope with this new scenario. Drawing on our experience, we have found five rules that help us design when AIs are involved.

How do you design the right interaction between a human and an artificial intelligence? Before answering, what do we mean by AI? Let's forget omniscient, malignant entities such as HAL 9000 and think instead of the ones we deal with every day: the satellite navigator, chatbots, virtual assistants, the robot vacuum cleaner, the systems that suggest what to buy or which series to watch in the evening…

These systems have been part of our lives for years, and as a studio we have long worked on expert and cognitive systems. Over this time, we have developed a series of reflections on the subject.

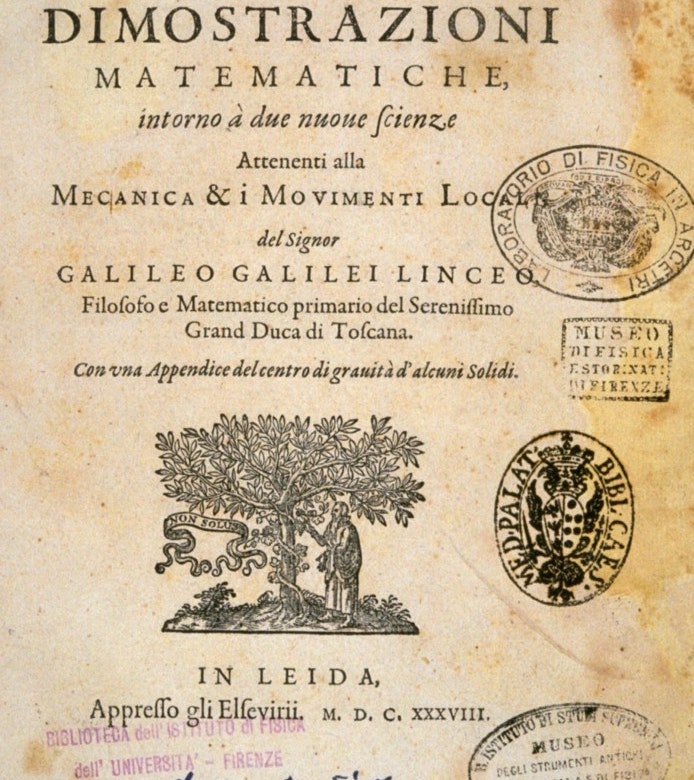

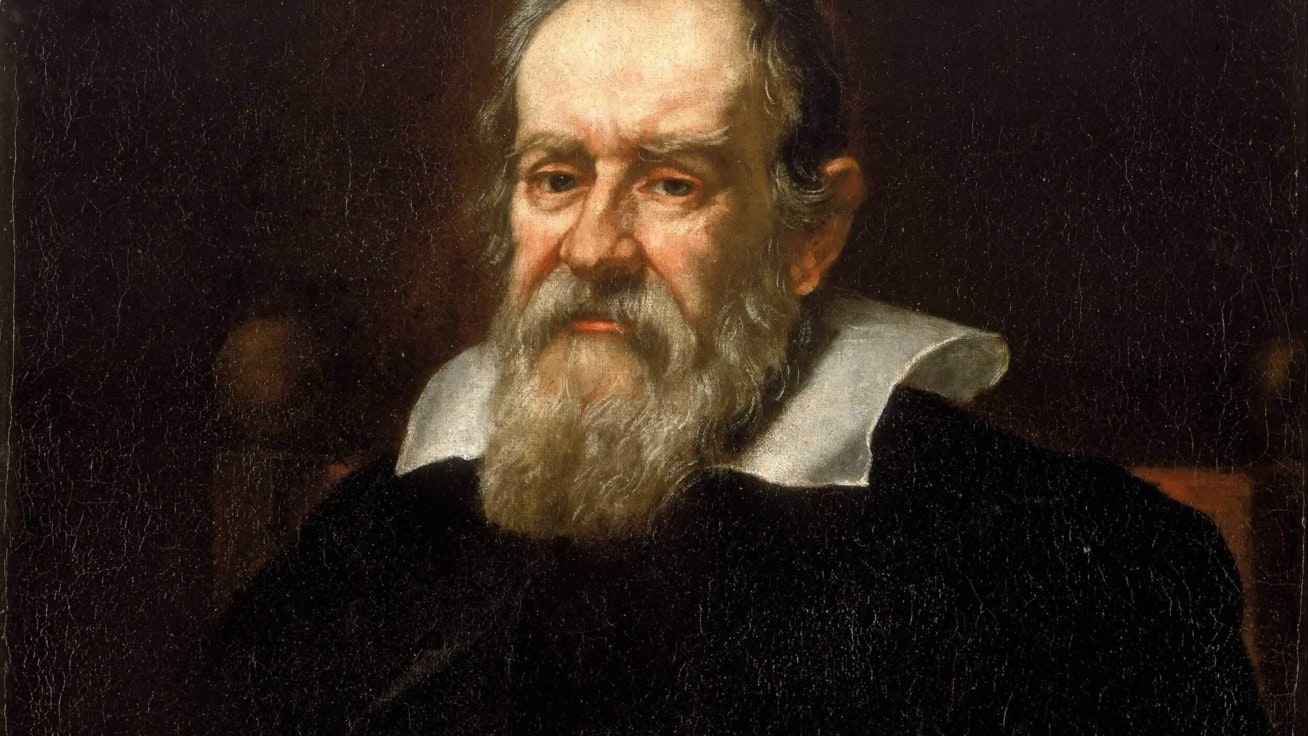

Our search for these rules starts with a book, and a very important one, since it lays down some of the axioms of modern physics, such as linear motion, inertia and translational momentum. It is the "Discorsi e dimostrazioni matematiche intorno a due nuove scienze", written in 1638 by Galileo Galilei, the father of modern science.